Tutorial

Starlake is not an orchestrator. It generates DAGs for you to run on your orchestrator of choice.

Now that our load and transform are working, we can run them on our orchestrator.

Starlake can generate DAGs for different orchestrators like Airflow, Dagster, and Snowflake Tasks.

Prerequisites

Make sure you run the Transform step first to get the data in the database.

On Snowflake, you need to log in with a role that has the CREATE TASK and USAGE privileges.

Running the DAG

Using the starlake dag-generate command, we can generate a DAG file that will run our load and transform tasks.

starlake dag-generate --clean

This will generate your DAG files in the root of the dags/generated directory.

You may also 'dry-run' the DAGs to see if they are working as expected.

python -m ai.starlake.orchestration --file {{file}} dry-run

where {{file}} is the path to the DAG file you want to run (located in dags/generated in our example).

- In Snowflake: This will display all the SQL commands that will be run.

- In Airflow: This will run the DAG in test mode.

- In Dagster: This will run the DAG in local mode.
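To dry-run every generated file in one pass, a small wrapper can build one command per file. The following is a minimal sketch, assuming the generated DAGs are .py files sitting directly in dags/generated. The helper name orchestration_commands is hypothetical and not part of starlake. It only uses the --file option and the dry-run and deploy actions shown in this tutorial.

```python
import sys
from pathlib import Path


def orchestration_commands(dag_dir, action):
    """Build one `python -m ai.starlake.orchestration` command per DAG file.

    Hypothetical helper: it only assembles argument lists, it runs nothing.
    """
    if action not in ("dry-run", "deploy"):
        raise ValueError(f"unsupported action: {action}")
    commands = []
    # Assumption: generated DAGs are .py files directly under dag_dir.
    # Sorting keeps the order stable from one run to the next.
    for dag_file in sorted(Path(dag_dir).glob("*.py")):
        commands.append([
            sys.executable, "-m", "ai.starlake.orchestration",
            "--file", str(dag_file), action,
        ])
    return commands
```

Each returned list can be passed to subprocess.run(cmd, check=True), which stops at the first DAG whose dry run fails.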

We are now ready to deploy the DAGs directly on our orchestrator.

python -m ai.starlake.orchestration --file {{file}} deploy

This will deploy the DAGs to your orchestrator.

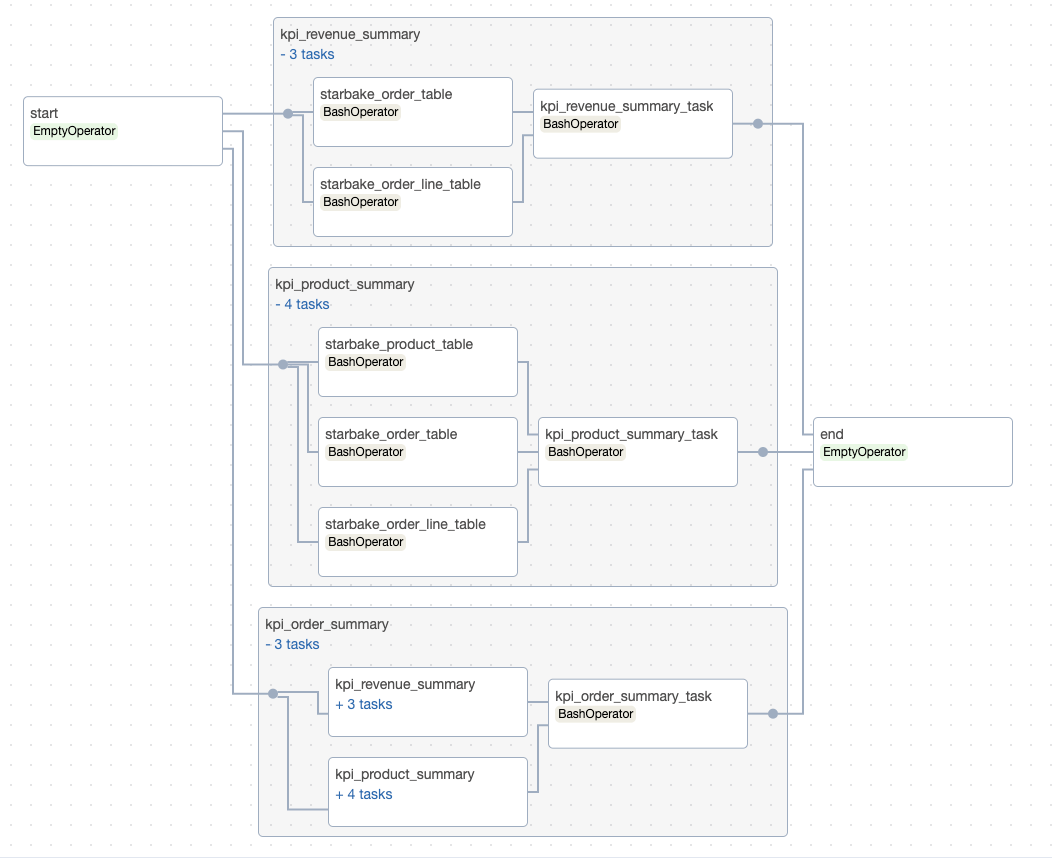

- Airflow

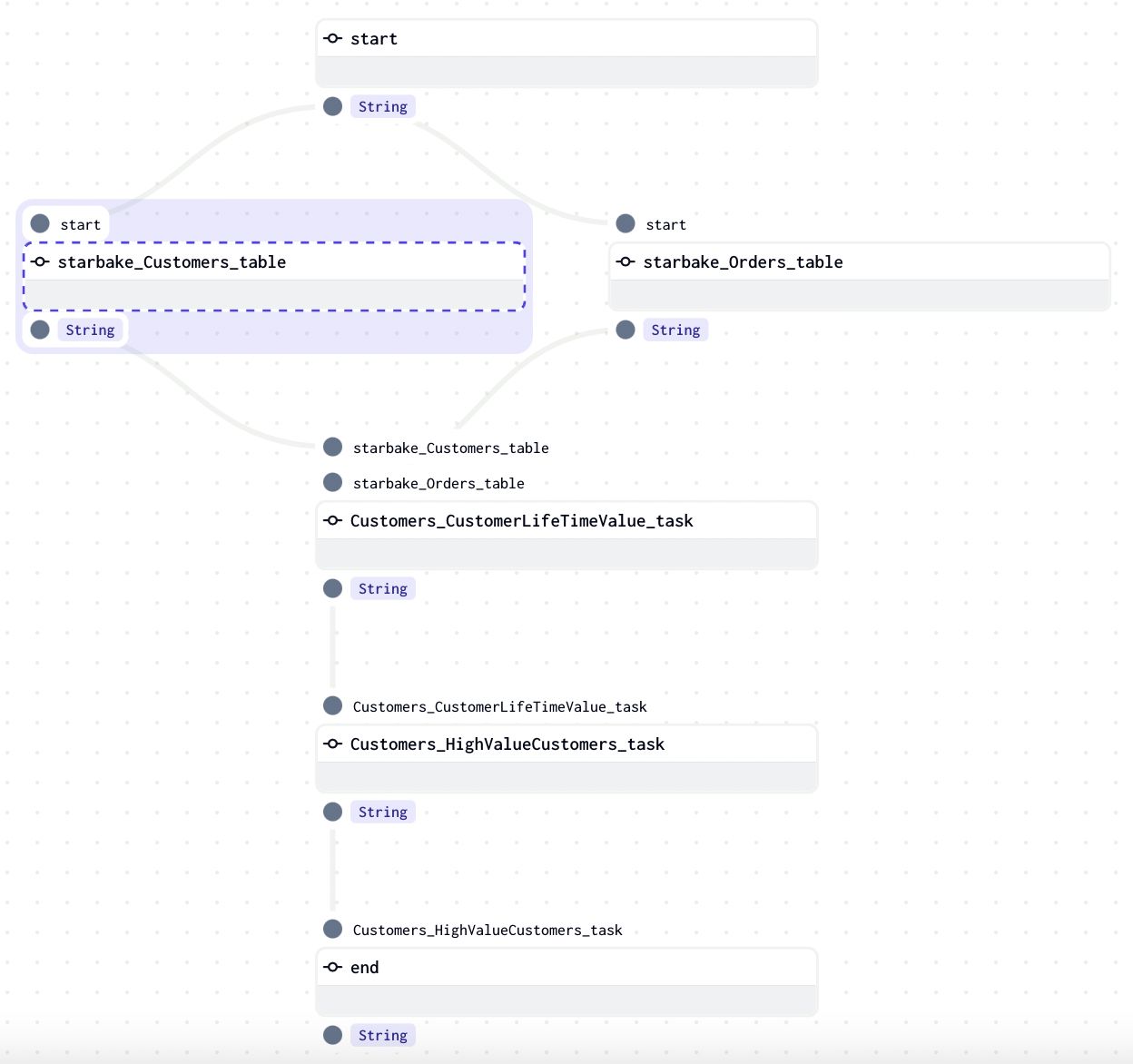

- Dagster

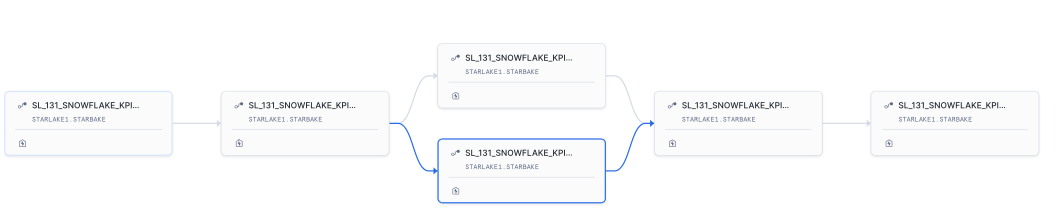

- Snowflake Tasks

Backfilling

You can backfill the DAGs to run them on a specific date range.

python -m ai.starlake.orchestration \
    --file {{file}} backfill \
    --start-date {{start_date}} \
    --end-date {{end_date}}

Where {{start_date}} and {{end_date}} are the start and end dates of the range you want to run the DAGs on.

This will run the DAGs on the specified date range.
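When backfills are scripted, it helps to validate the date range before calling the command. This is a minimal sketch, assuming the dates are passed as ISO 8601 strings (YYYY-MM-DD), a format this tutorial does not state. The helper name backfill_command is hypothetical. The options it emits are the ones shown in the command above.

```python
import sys
from datetime import date


def backfill_command(dag_file, start_date, end_date):
    """Build the backfill command for one DAG file after checking the range."""
    # Assumption: dates are ISO 8601 strings such as "2024-01-31".
    # date.fromisoformat raises ValueError on malformed input.
    start = date.fromisoformat(start_date)
    end = date.fromisoformat(end_date)
    if start > end:
        raise ValueError("start_date must not be after end_date")
    return [
        sys.executable, "-m", "ai.starlake.orchestration",
        "--file", dag_file, "backfill",
        "--start-date", start.isoformat(),
        "--end-date", end.isoformat(),
    ]
```

Rejecting an inverted or malformed range up front avoids sending a request to the orchestrator that cannot succeed.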

Configuration

The DAG generation is based on the configuration files located in the metadata/dags directory.

Place the configuration files for the DAGs you want to generate there, and reference them either globally in the metadata/application.sl.yml file or specifically for each load or transform task through the dagRef attribute.

- Airflow

    dag:
      comment: "default Airflow DAG configuration for load"
      template: "load/airflow_scheduled_table_bash.py.j2"
      filename: "airflow_all_tables.py"
      options:
        pre_load_strategy: "imported"

    dag:
      comment: "default Airflow DAG configuration for transform"
      template: "transform/airflow_scheduled_task_bash.py.j2"
      filename: "airflow_all_tasks.py"
      options:
        load_dependencies: "true"

- Dagster

    dag:
      comment: "default Dagster pipeline configuration for load"
      template: "load/dagster_scheduled_table_shell.py.j2"
      filename: "dagster_all_load.py"
      options:
        pre_load_strategy: "imported"

    dag:
      comment: "default Dagster pipeline configuration for transform"
      template: "transform/airflow_scheduled_task_bash.py.j2"
      filename: "airflow_all_tasks.py"
      options:
        load_dependencies: "true"

- Snowflake Tasks

    dag:
      comment: "default Snowflake pipeline configuration for load"
      template: "load/snowflake_load_sql.py.j2"
      filename: "snowflake_{{domain}}_{{table}}.py"

    dag:
      comment: "default Snowflake pipeline configuration for transform"
      template: "transform/snowflake_scheduled_transform_sql.py.j2"
      filename: "snowflake_{{domain}}_tasks.py"
      options:
        load_dependencies: "true"
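The filename values above mix fixed names such as airflow_all_tables.py with patterns that contain {{domain}} and {{table}} placeholders. The sketch below illustrates one plausible reading, stated here as an assumption because the tutorial does not spell it out: a placeholder is filled once per domain or per table, so a pattern yields one file per distinct combination. The function names and the sample domain and table names are hypothetical.

```python
def expand_filename(pattern, domain=None, table=None):
    """Substitute {{domain}} and {{table}} placeholders in a filename pattern."""
    result = pattern
    for name, value in (("domain", domain), ("table", table)):
        placeholder = "{{" + name + "}}"
        if placeholder in result:
            if value is None:
                raise ValueError(f"pattern needs a value for {name}")
            result = result.replace(placeholder, value)
    return result


def filenames_for(pattern, tables):
    """Return the distinct filenames a pattern yields for (domain, table) pairs."""
    # A set collapses duplicates: a pattern without {{table}} gives one
    # name per domain, and a pattern with no placeholder gives one name.
    names = {expand_filename(pattern, domain, table) for domain, table in tables}
    return sorted(names)
```

Under this assumption, a fixed filename groups everything into a single DAG file, while adding placeholders splits the output by domain or by table.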

pre_load_strategy (none by default):

You can specify an optional preload strategy to conditionally load a domain.

Valid values are: imported, ack, pending, or none. If not set, the default strategy is none.

- imported: Loads files from the stage directory.

- ack: Loads files only if an acknowledgment (ack) file is present in the stage directory.

- pending: Loads files from the pending directory.

- none: Disables preloading.

The preload strategy acts as a lightweight pre-check. It simply checks for the presence of files based on the selected strategy. If no matching files are found, the process exits silently—without raising an error or consuming additional resources.
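The pre-check described above can be pictured as a simple file-presence test. This is a minimal sketch and not starlake's implementation: the function name should_load, the directory arguments, and the ack file name ready.ack are all hypothetical, and it assumes that the none strategy lets the load proceed without any check.

```python
from pathlib import Path

VALID_STRATEGIES = ("imported", "ack", "pending", "none")


def _has_files(directory):
    """True if the directory exists and holds at least one regular file."""
    path = Path(directory)
    return path.is_dir() and any(p.is_file() for p in path.iterdir())


def should_load(strategy, stage_dir, pending_dir, ack_name="ready.ack"):
    """Decide whether a load should run, based on file presence only."""
    if strategy not in VALID_STRATEGIES:
        raise ValueError(f"unknown pre_load_strategy: {strategy}")
    if strategy == "none":
        # Assumption: with no pre-check, the load always proceeds.
        return True
    if strategy == "imported":
        return _has_files(stage_dir)
    if strategy == "ack":
        # Hypothetical ack file name; the real name is configuration-dependent.
        return (Path(stage_dir) / ack_name).is_file()
    return _has_files(pending_dir)  # strategy == "pending"
```

A False result corresponds to the silent exit described above: nothing is loaded and no error is raised.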

load_dependencies: "true" is a flag that tells the DAG generator to include in the DAG file, for transformations, the tables or tasks they depend on.

template is the template file that will be used to generate the DAG file. This may reference:

- an absolute path on the filesystem

- a path relative to the metadata/dags/templates/ directory

- a template that is built into the starlake library and is located in the load and transform resource directories.
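The three kinds of template reference can be told apart with a short lookup. This is a minimal sketch under stated assumptions: the lookup order (absolute path, then the project templates directory, then built-in) is a guess rather than documented behavior, and the function name resolve_template is hypothetical.

```python
from pathlib import Path


def resolve_template(ref, templates_dir="metadata/dags/templates"):
    """Classify a template reference as absolute, project, or built-in."""
    path = Path(ref)
    if path.is_absolute():
        return ("absolute", path)
    candidate = Path(templates_dir) / path
    if candidate.is_file():
        return ("project", candidate)
    # Nothing found on disk: assume the reference names a template
    # bundled with the starlake library (for example under load/ or transform/).
    return ("builtin", path)
```

With this order, a file placed in metadata/dags/templates would shadow a built-in template of the same relative name, which is one way to customize a template without changing the configuration.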

The load and transform tasks will be run as bash commands on the orchestrator. To run them on a different executor, you can switch to a different template or build your own. Starlake comes with templates out of the box.

On Snowflake, the tasks will be run as native Snowpark tasks.