SQL Transforms

SQL transforms are used to materialize SELECT statements using a user defined strategy.

SELECT statements

SQL transforms are SELECT statements materialized using a user defined strategy. The result of the SELECT statement is then used to populate the target table by applying the write strategy defined in the corresponding YAML configuration file. The SELECT statement can be as simple or as complex as needed.

The only requirement is that the result of the SELECT statement must match the schema of the target table if it exists, otherwise starlake will infer the schema.

Column description

Most of the time you won't need to document the columns in the SELECT statement as starlake will infer the schema from the result of the SELECT statement and the column documentation from the source table column description.

You may need to document the calculated columns in the SELECT statement as they don't exist in the source tables.

You can do that by adding setting the attributesDesc key in the YAML configuration file.

task:

...

attributesDesc:

name: <column_name>

comment: <column_description>

...

Custom write strategy

If none of the predefined write strategies fits your need, you can write your own update/insert/merge statements directly in the SQL file instead of letting starlake convert your select statements into update/insert/merge statements.

But in that case, you will need to set the parseSQL (true by default) to false in the YAML configuration file.

task:

...

parseSQL: false

...

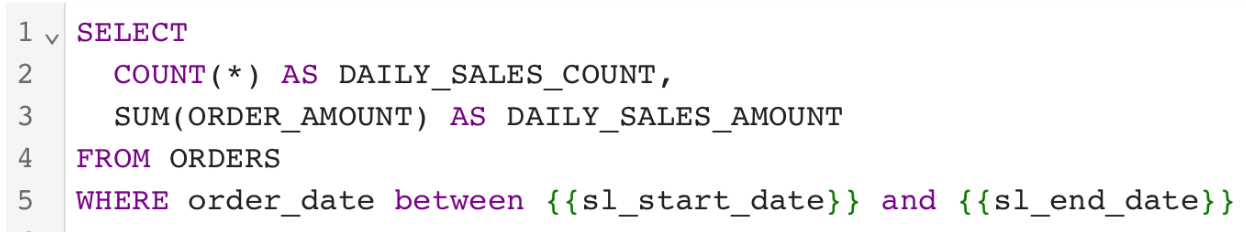

Incremental modelling

As data arrives periodically we have to build KPIs on that new data only. For example, the daily_sales KPI does not need the whole input dataset but just the data for the day we are computing the KPI. The computed daily KPI are then appended to the existing dataset, greatly reducing the amount of data processed.

Starlake allows you to write your query as follows:

The sl_start_date and sl_end_date variables represent the start and end times for

which the query is being executed. These are reserved environment variables that are automatically

passed to the transformation process by the orchestrator (e.g., Airflow, Dagster or Snowflake Tasks).

You might wonder what happens if the job fails to run for a specific day.

There's no need to worry—Starlake handles this seamlessly by leveraging Airflow's

Catchup mechanism. By configuration, Starlake requests the orchestrator

to catch up on any missed intervals. To enable this, simply add the catchup flag

to your YAML DAG definition in Starlake, and as expected the orchestrator will run the missed intervals using

the sl_start_date and sl_end_date variables valued accordingly.